Evaluation of OPE¶

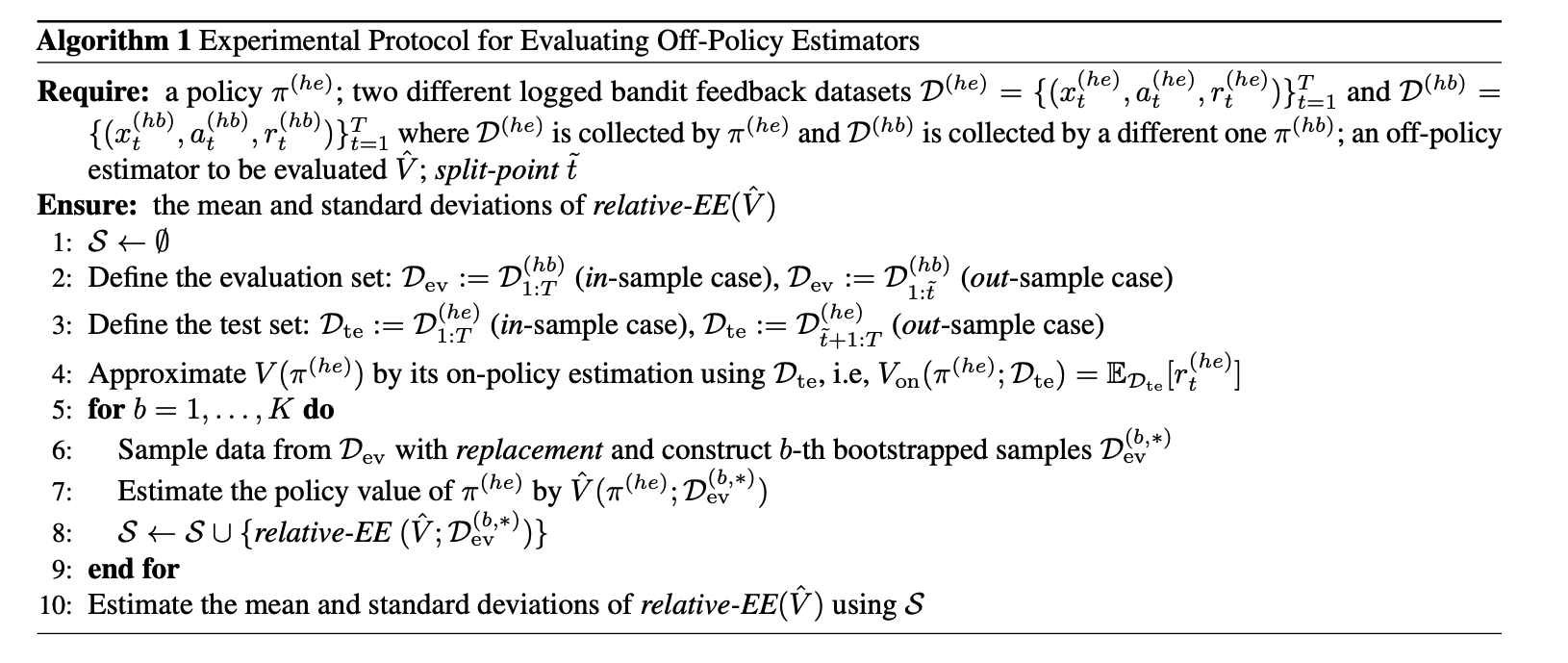

Here we describe an experimental protocol to evaluate OPE estimators and use it to compare a wide variety of existing estimators.

We can empirically evaluate OPE estimators’ performances by using two sources of logged bandit feedback collected by two different policies \(\pi^{(he)}\) (hypothetical evaluation policy) and \(\pi^{(hb)}\) (hypothetical behavior policy). We denote log data generated by \(\pi^{(he)}\) and \(\pi^{(hb)}\) as \(\calD^{(he)} := \{ (x^{(he)}_t, a^{(he)}_t, r^{(he)}_t) \}_{t=1}^T\) and \(\calD^{(hb)} := \{ (x^{(hb)}_t, a^{(hb)}_t, r^{(hb)}_t) \}_{t=1}^T\), respectively. By applying the following protocol to several different OPE estimators, we can compare their estimation performances:

Define the evaluation and test sets as:

in-sample case: \(\calD_{\mathrm{ev}} := \calD^{(hb)}_{1:T}\), \(\calD_{\mathrm{te}} := \calD^{(he)}_{1:T}\)

out-sample case: \(\calD_{\mathrm{ev}} := \calD^{(hb)}_{1:\tilde{t}}\), \(\calD_{\mathrm{te}} := \calD^{(he)}_{\tilde{t}+1:T}\)

where \(\calD_{a:b} := \{ (x_t,a_t,r_t) \}_{t=a}^{b}\).

Estimate the policy value of \(\pi^{(he)}\) using \(\calD_{\mathrm{ev}}\) by an estimator \(\hat{V}\). We can represent an estimated policy value by \(\hat{V}\) as \(\hat{V} (\pi^{(he)}; \calD_{\mathrm{ev}})\).

Estimate \(V(\pi^{(he)})\) by the on-policy estimation and regard it as the ground-truth as

\[V_{\mathrm{on}} (\pi^{(he)}; \calD_{\mathrm{te}}) := \E_{\calD_{\mathrm{te}}} [r^{(he)}_t].\]Compare the off-policy estimate \(\hat{V}(\pi^{(he)}; \calD_{\mathrm{ev}})\) with its ground-truth \(V_{\mathrm{on}} (\pi^{(he)}; \calD_{\mathrm{te}})\). We can evaluate the estimation accuracy of \(\hat{V}\) by the following relative estimation error (relative-EE):

\[\textit{relative-EE} (\hat{V}; \calD_{\mathrm{ev}}) := \left| \frac{\hat{V} (\pi^{(he)}; \calD_{\mathrm{ev}}) - V_{\mathrm{on}} (\pi^{(he)}; \calD_{\mathrm{te}}) }{V_{\mathrm{on}} (\pi^{(he)}; \calD_{\mathrm{te}})} \right|.\]To estimate standard deviation of relative-EE, repeat the above process several times with different bootstrap samples of the logged bandit data created by sampling data with replacement from \(\calD_{\mathrm{ev}}\).

We call the problem setting without the sample splitting by time series as in-sample case. In contrast, we call that with the sample splitting as out-sample case where OPE estimators aim to estimate the policy value of an evaluation policy in the test data.

The following algorithm describes the detailed experimental protocol to evaluate OPE estimators.

Using the above protocol, our real-world data, and pipeline, we have performed extensive benchmark experiments on a variety of existing off-policy estimators. The experimental results and the relevant discussion can be found in our paper. The code for running the benchmark experiments can be found at zr-obp/benchmark/ope.